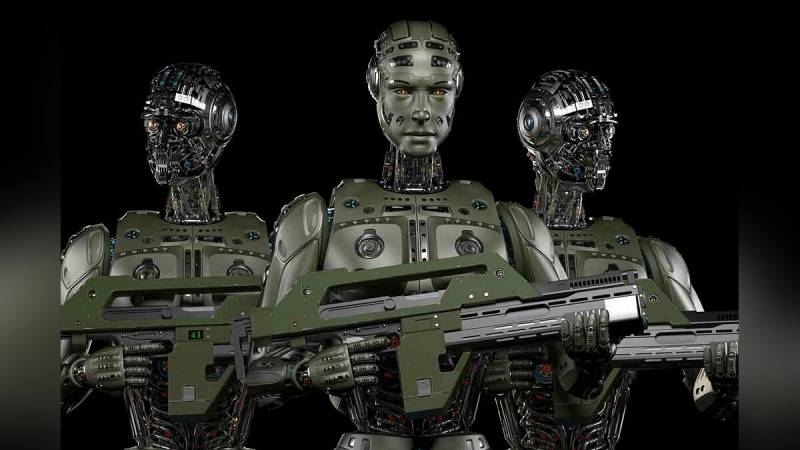

Notes of the human mind: Americans want to change military artificial intelligence

Source: vgtimes.ru

Is digital dehumanization cancelled?

First, a word of warning from those who fight against military AI:

Pacifists who demand a freeze on all work on combat artificial intelligence fall into two types. The first are those who have watched too much "Terminator" and similar films. The second judge the future by the present-day capabilities of combat robots, above all winged unmanned aircraft equipped with strike systems.

There is no shortage of episodes in which drones destroyed civilians by mistake or deliberately. In the Middle East, American drones have destroyed more than one wedding ceremony. The operators of these flying robots took celebratory shooting into the air for a sign of guerrilla firefights. If a specially trained person cannot make out the details of a target from several hundred meters, what can be expected of artificial intelligence? At present, machine vision cannot be compared with the human eye and brain in terms of how adequately it perceives an image. True, a machine does not get tired, but operator fatigue is also solved by a timely change of shift.

Clouds are clearly gathering over military artificial intelligence. On the one hand, there is more and more evidence of an imminent technological breakthrough in this area. On the other hand, more and more voices are heard in favor of limiting or even banning work in this direction.

A few examples.

In 2016, a petition appeared in which prominent thinkers and thousands of other people demanded that artificial intelligence not be given lethal weapons. Among the signatories are Stephen Hawking and Elon Musk. Over the past seven years, the petition has collected more than 20 thousand signatures. Besides purely humanistic fears about the uncontrolled destruction of people, there are also legal inconsistencies.

Who will stand trial if war crimes committed by artificial intelligence are recorded? A drone operator who burned down several villages with civilians is easy to find and punish accordingly. Artificial intelligence, by contrast, is the product of the collective work of programmers, and it is very difficult to hold a single person accountable. Alternatively, the manufacturer could be put on trial, for example Boston Dynamics, but who would then want to produce autonomous drones? Few people would wish to find themselves in the dock of a second Nuremberg Tribunal.

Source: koreaportal.com

It is probably for this reason that industrialists and programmers are trying to slow down the development of combat capabilities in artificial intelligence.

For example, in 2018, about two hundred IT companies and almost five thousand programmers pledged not to work on autonomous combat systems. Google claims that in five years it will completely abandon military contracts in the field of artificial intelligence. As the story goes, this pacifism is no accident: programmers who learned that they were writing code for military systems threatened to quit en masse. In the end a compromise was found: existing contracts are being completed, but no new ones are being signed. It is possible that, closer to the date when work on combat AI is due to stop, the intractable "programmers" will simply be fired and replaced with no less talented ones. For example, from India, which has long been famous for its cheap intellectual resources.

Then there is the Stop Killer Robots organization, which calls on world leaders to sign something like a convention banning combat AI. So far without success.

All of the above makes military officials look for workarounds. Who knows, one day the election may be won by a US president who promises not only universal LGBT grace but also a ban on the improvement of military artificial intelligence.

Human thinking for AI

The Pentagon appears to be on the cusp of some kind of breakthrough in AI. Or it has been convinced that it is. There is no other way to explain the emergence of a new directive regulating the humanization of autonomous combat systems. Kathleen Hicks, US Deputy Secretary of Defense, comments:

Did everyone who trembles before autonomous killer robots hear that? American artificial intelligence will henceforth be the most humane. Just like the Americans themselves.

Source: robroy.ru

The problem is that no one really understands how to instill in armed robots the notorious "human judgment regarding the use of force." The exact wording from the concept, updated at the end of last January:

Take this example: when clearing a house, an assault trooper first throws a grenade into the room and only then enters it himself. Is this human judgment? Of course, and no one has the right to judge him, especially if he first shouted "Is anyone there?". But what if an autonomous robot follows the same procedure?

Human judgment is too broad a concept to be limited in any way. Is the execution of Russian prisoners of war by fighters of the Armed Forces of Ukraine also human thinking?

The addition to Pentagon Directive 3000.09 on autonomous combat systems is full of platitudes. For example,

Before that, apparently, they worked imprudently and not in accordance with the laws of war.

At the same time, there is not a hint of criticism of the Pentagon's January initiative in the American and European press. The false humanization of artificial intelligence is nothing more than an attempt to disguise what is happening. The US military will now hold a solid trump card in its fight against opponents of artificial intelligence in the army. Look, ours is not just any AI, but AI with "the right level of human judgment."

Considering that there is still no clear and generally accepted definition of "artificial intelligence", all the rule-writing around it can only be met with irony. At the very least.

How can mathematical algorithms that work with large data arrays be made to exercise human judgment?

This main question is not answered in the updated Directive 3000.09.
