Americans call for a ban on the Russian "Poseidon"

Skynet 2.0

The most telling case of a machine error nearly starting a third world war was the incident of September 26, 1983, at the Serpukhov-15 command post near Moscow.

That night, Lieutenant Colonel Stanislav Petrov was on duty at the command post, having agreed to stand in for a colleague. At 00:15 the missile attack siren went off: the alarm had been raised by the Oko early warning system. According to the computer, several ballistic missiles had been launched from the West Coast of the United States and were heading toward the Soviet Union. The country's leadership would have had no more than 30 minutes to respond.

This, incidentally, ties in closely with President Putin's recent remarks about the strategic depth of Russia's defense. If Ukraine were to join NATO, there would be no question of a thirty-minute window for a retaliatory strike. But in 1983 the situation was different.

Yet Lieutenant Colonel Petrov did not report the missile attack to his superiors, and this most likely saved the planet from all-out war. His experience told him that the United States would not launch a preemptive strike with such small forces: the missiles were coming from a single military base. A real American attack would have involved several hundred warheads.

Instead, the lieutenant colonel reported a false alarm to the command. Consider the weight of responsibility resting on Petrov, who in all his years of service at the facility had never seen the warning system fail. Moreover, he had himself taken part in developing it and knew how reliable it was. All that remained was to wait and see whether the early warning radars actually detected approaching missiles. None appeared: it turned out that the Oko system had mistaken sunlight striking its sensors at an unlucky angle for the flares of rocket engines.

Why bring up a story from nearly forty years ago? Because automated systems tied to strategic weapons are imperfect and can fail. The story of Petrov, who truly saved the world from nuclear war, is certainly far from the only one; the secrecy regimes in both Russia and the United States simply prevent such incidents from being disclosed. But while an error by a missile attack warning system can still be corrected somehow, there will be no one to stop a fully autonomous weapon system. And this worries the Americans a great deal, especially as it applies to the Russian nuclear torpedo "Poseidon".

Blue Peacock and others

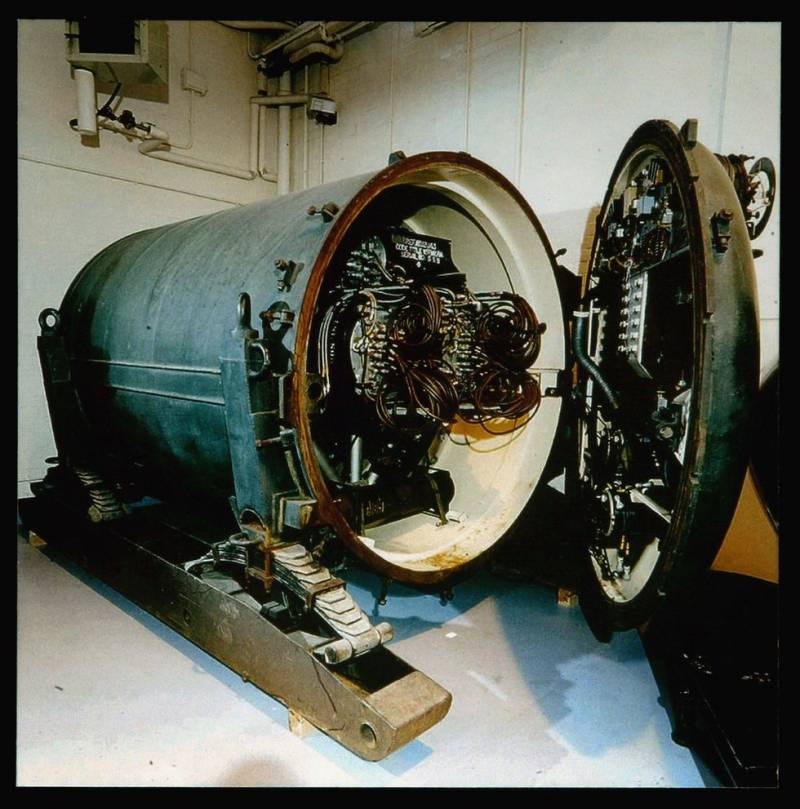

"Poseidon", which the Americans variously call an "underwater drone", a "nuclear torpedo" and even an "unmanned bathyscaphe", is not the first development of its kind. In the 1950s, the UK developed the Blue Peacock nuclear mine. The device weighed more than 7 tons and was meant to be detonated in territory captured by Soviet troops. There were three ways to set off the mine: via a cable several kilometers long, via an eight-day timer, and via an anti-tamper device that detonated the mine if anyone tried to open it.

Fortunately, this infernal machine never went into series production, but it is remembered for one unusual solution. It was proposed to use... chickens. Several birds were to be sealed in a special compartment supplied with food and water. Their job as prisoners of this atomic munition was simply to give off body heat, keeping the mine's electronics from freezing in the cold. The chickens were expected to survive for about a week, roughly as long as the timer ran.

The detonation was meant to take place in territory occupied by the enemy. For example, the plan was to bury several dozen of these "Peacocks" in the Federal Republic of Germany, in the path of the Soviet army. The flawed idea was abandoned, and the prototype of the mine is now gathering dust in the British Armory Museum.

Today, of course, nobody is talking about such curious automated systems for controlling nuclear weapons; the proposal now is, above all, to hand control to artificial intelligence. As attack systems grow ever more complex and hypersonic weapons emerge, the time available to respond keeps shrinking, and electronic algorithms would allow faster reactions. In the Americans' view, it is better not to let things come to a head and to agree on a ban on autonomous nuclear weapons in advance. So far, this is only a private initiative by researcher Zachary Kallenborn, published in the Bulletin of the Atomic Scientists, but the idea may well be taken up at the state level.

The point is that the Americans believe they lag behind Russia in this area. They see Poseidon as a two-megaton underwater nuclear bomb capable of sinking a carrier strike group or unleashing a devastating tsunami on the US West Coast. Their fears are compounded by total uncertainty about how autonomous Poseidon really is; open sources offer nothing but speculation. Still, Kallenborn, the author of the initiative, makes fairly strong arguments against bringing AI into nuclear security.

First, the high level of secrecy around strategic facilities often means that only satellite data is available. Simply put, there is not enough data for AI algorithms to work reliably. Neural networks accustomed to processing large volumes of information will produce false positives or false negatives. Weather conditions such as clouds, snow or rain can also interfere. With a strike drone or an autonomous tank, such an error may cost relatively little blood; with nuclear missiles, the consequences would be catastrophic.

Training a "nuclear" AI is also a problem. What examples can it learn from, when nuclear weapons testing has long been banned and the only cases of actual use are Hiroshima and Nagasaki?

The second reason Kallenborn distrusts automated systems is the risk of provocation by terrorists. Imagine that some powerful organization decides to stage a provocation and simulate a nuclear attack. All it needs is knowledge of the markers the AI relies on to recognize an attack. Electronic brains, lacking their own "Lieutenant Colonel Petrov", will take the provocation at face value and strike back. A nuclear conflict between the powers is triggered, and the terrorists get what they wanted.

The reverse is also possible. An adversary ready to start a world war could slip past the monitoring AI with simple camouflage. Here two machine intelligences are pitted against each other, one on the defender's side and one on the attacker's. Not everyone is willing to let the fate of the world be decided in such a duel. As Kallenborn sees it, fooling an AI is not very hard: change a few pixels in the image it sees, and an approaching strategic bomber may be taken for a dog.

American fears also concern systems such as the "Dead Hand", known in the Soviet Union as "Perimeter": a system that controls a retaliatory nuclear strike if communication with the higher command center is lost. Work on something similar based on AI algorithms has been under way in the United States since 2019, and this causes some concern among the authors of the Bulletin of the Atomic Scientists.

Kallenborn published his article on February 1, 2022, calling on the three largest nuclear powers, Russia, China and the United States, to sit down at the negotiating table this very year to address the problem of AI in nuclear deterrence. Oddly enough, even against the backdrop of the current crisis, this does not look crazy: in the emerging new world order, it is precisely such agreements that could prove very useful. But Russia should conduct these negotiations from a position of strength. After all, it was Russia's own Poseidon that made the establishment across the ocean so worried.
