Deepfake technology as a reason to start a war

A new world with deepfakes

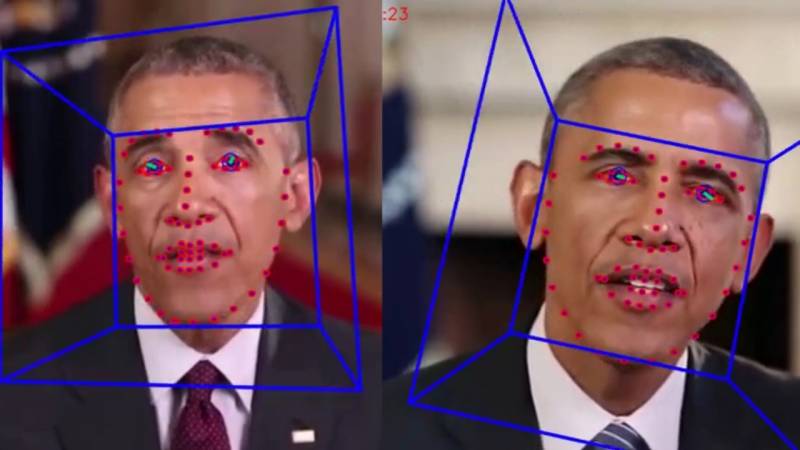

Today the Internet seems to pour out of every household appliance, and it is becoming increasingly hard to find a point on the map without 4G coverage. Broadband Internet means, above all, HD video on the mainstream platforms, which is gradually replacing news feeds, analytics and plain entertaining reading for us. It is also an instrument of influence over billions of people, one that makes it possible to shape the desired public opinion at any given moment. The pinnacle of these games with the public may turn out to be deepfake technology, which has already proved able to turn celebrities such as Gal Gadot, Scarlett Johansson, Taylor Swift, Emma Watson and Katy Perry into stars of the porn industry.

A deepfake is produced by an algorithm that imitates a person's behavior and appearance in video. The technology takes its name from a combination of “deep learning” and “fake”. At its heart are the much-discussed neural networks arranged as a Generative Adversarial Network (GAN). Two processes built into the program constantly compete with each other: one trains on the supplied photographs to produce a convincing face swap, and the other rejects unsuitable results, until the machine itself begins to confuse the original with the copy.

In this complex scheme, the main goal of a deepfake is to create false photos and videos in which the original face is replaced with another. For example, the charismatic US President Donald Trump could well take the place of any odious leader of the 20th century and preach outright heresy to the masses from the rostrum. Already, in one generated deepfake video, former President Barack Obama allows himself foul language about Donald Trump.
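The contest between the two GAN processes described above can be illustrated with a deliberately tiny sketch. It is not how any real deepfake tool is built: instead of faces it works with single numbers, the “generator” is a hypothetical linear function `a * z + b`, the “discriminator” is a hypothetical logistic classifier, and all names, learning rates and starting values are assumptions chosen only for illustration.

```python
import math
import random


def sigmoid(x):
    """Logistic function written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Train a 1-D toy GAN and return ((a, b), (w, c)).

    Generator:      fake = a * z + b, with z drawn from N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the "probability x is real"
    All parameter names and defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters

    for _ in range(steps):
        real = rng.gauss(real_mean, real_std)   # a "genuine" sample
        z = rng.gauss(0.0, 1.0)                 # random noise
        fake = a * z + b                        # the generator's forgery

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)), i.e. learn to tell them apart.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake),
        # i.e. learn to make the discriminator accept the forgery.
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w
        a += lr * grad_fake * z
        b += lr * grad_fake

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator offset b:", b)
    print("discriminator score for a fresh fake:", sigmoid(w * b + c))
```

Each loop iteration plays one round of the game: the discriminator is nudged to separate real from fake, then the generator is nudged to fool it. Real face-swapping systems replace both toy functions with deep neural networks trained on images, but the alternating structure is the same idea.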

Of course, at first deepfakes spread purely virally: the faces of Hollywood actresses were grafted into the simple plots of porn videos, with all the ensuing consequences. Or, for example, actor Nicolas Cage suddenly becomes the lead in scenes from the most iconic films of our time. Some of these creations are shown in the video and, frankly, many of them look rather clumsy.

But hip-hop artist Cardi B, who appeared on Jimmy Fallon's evening show with the face of actor Will Smith, looks quite convincing.

And here is the original.

The works on the Ctrl Shift Face channel look good. For example, Sylvester Stallone tried on the lead role in Terminator.

IT analysts are already claiming that deepfake technology may be the most dangerous development in the digital field in recent decades. Building on it, specialists from Princeton, the Max Planck Institute and Stanford created the Neural Rendering application. Its task is even more dangerous: to “force” the image of a person to pronounce any text in any language in that person's own voice. All it requires is a 40-minute video with sound, from which the neural network learns to work with the person's voice and facial expressions and to transform them into new utterances. Initially, of course, the idea is positioned as a benign one: the main consumers of Neural Rendering are meant to be film studios that want to cut the time spent shooting takes. However, it immediately became clear to everyone that in the near future virtually any user will be able to generate hair-raising video fakes on a laptop.

The second offshoot of the “fake” program was the DeepNude application, which can quite realistically “strip” any woman in a photo naked. In the first days of the service, the flood of requests, many of them failing, was so large that the developers, fearing lawsuits, announced its closure. But hackers broke into the resource, and now DeepNude can be used by anyone. Attempts are of course being made to restrict access to the service, but everyone understands that this is only a temporary measure.

Deepfakes have turned out to be a good tool in the hands of scammers. One British energy company was robbed of 220 thousand euros when a “clone” of a manager from Germany contacted its finance department. In a simulated voice he asked for an urgent transfer of money to an account in Hungary, and the business partners had no reason to distrust the caller. Of course, faking video on a massive scale and with high quality is still problematic for now: state regulators are constantly blocking resources that offer fakeapp and facefake, and the power of ordinary computers does not yet allow quick video synthesis. The work has to be delegated to paid remote servers, which need thousands of photos of both the original person and the “victim”.

Casus belli

Deepfake technology may leave actors without work in the future: the entire industry may well switch to cartoon-like screen heroes, many of whom the technology will raise from the dead. But these are more likely dreams of the future, since numerous trade unions and a basic lack of computing power push that prospect several years ahead. Even so, in the film “Rogue One: A Star Wars Story” the director has already “resurrected” for one episode the actor Peter Cushing, who died in 1994. Rumor has it that the famous James Dean may appear in a new film about the Vietnam War. Neural networks help actors who are getting on in years look 10-20 years younger on screen, for example Arnold Schwarzenegger and Johnny Depp. On average, at least 15-20 thousand deepfake videos are generated worldwide every month, and most of them end up on the Internet. Russian programmers are trying to keep up with global trends: in July 2020 Vera Voice will invite fans of Vladimir Vysotsky's work to talk with a digital copy of the singer at the Taganka Theater.

Everything points to video and photo evidence ceasing to be an effective argument in litigation, and to global video surveillance systems becoming a waste of money. No one will trust frames from CCTV cameras. Where is the guarantee that they are not a synthesized dummy? In political propaganda, deepfakes are already becoming a powerful lever for influencing voters' opinions. In October 2019 California became the first state to ban the posting of such videos of political candidates 60 days or less before an election. Violation of this law, AB 730, carries criminal liability. Several more states have since joined the initiative, and from January 2020 China will forbid the publication of synthesized deepfake photos and videos without a special mark. Incidentally, one of the effective ways to spot a fake by eye today is the lack of natural blinking in synthesized characters.
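The blinking cue mentioned above can also be checked automatically. The sketch below is one possible approach, not a method named in this article: it uses the eye aspect ratio (EAR), a common measure computed from six landmark points around the eye, and counts how often the eye closes. The landmark coordinates are assumed to come from some external face-landmark detector, and the threshold `closed_below=0.2` and `min_closed_frames=2` are hypothetical values that would need tuning on real footage.

```python
import math


def eye_aspect_ratio(eye):
    """Return the EAR for six (x, y) landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid. The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, closed_below=0.2, min_closed_frames=2):
    """Count runs of consecutive frames in which the eye looks closed.

    Both thresholds are illustrative assumptions, not calibrated values.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < closed_below:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    # A blink may still be in progress when the clip ends.
    if closed_run >= min_closed_frames:
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame, fps):
    """Blink rate for a clip, given one EAR value per video frame."""
    minutes = len(ear_per_frame) / fps / 60.0
    if minutes <= 0:
        return 0.0
    return count_blinks(ear_per_frame) / minutes


if __name__ == "__main__":
    # One minute of 30 fps video containing a single short blink.
    ears = [0.3] * 898 + [0.1] * 2 + [0.3] * 900
    print("blinks per minute:", blinks_per_minute(ears, fps=30))
```

A clip in which a talking face almost never blinks would be a candidate for closer inspection. This is only a heuristic: a generator trained on footage with natural blinking would not be caught by it.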

Now imagine how the development of deepfake technology (and development cannot be stopped as long as there is demand) will upend our very idea of truth and lies, especially once state structures adopt the novelty. Any synthesized video of yet another spy exposé, skillfully used, could become grounds at the very least for another package of sanctions or the closure of a diplomatic mission. And it will no longer be necessary to stage chemical attacks on civilians in order to authorize a massive missile strike on a sovereign state. Acts of genocide, the consequences of the use of weapons of mass destruction, provocative and abusive behavior by a country's top officials: in the right situation this whole deepfake bouquet can justify the start of another military adventure in the eyes of voters. And when the guns speak and the rockets fly, no one will particularly remember which revealing video the war started with.

For now there is no definite answer as to what to do about this disaster. The best algorithms tuned to expose deepfakes can guarantee only a 97% probability of detection. Currently, any forward-looking programmer can take part in the Deepfake Detection Challenge, which Facebook announced in September 2019. A prize fund of $10 million will go to whoever can develop an algorithm that recognizes fake videos with a 100% guarantee. One can only guess how quickly the response from underground deepfake developers will follow.
