Artificial Intelligence. Part two: extinction or immortality?

Here is the second part of an article from the series “Wait, how can all this be real, and why isn't everyone talking about it?” In the previous part, we learned that an intelligence explosion is gradually creeping up on the people of planet Earth: AI is trying to grow from narrow intelligence to general, human-level intelligence and, finally, to artificial superintelligence.

The first part of the article began innocently enough. We discussed artificial narrow intelligence (ANI, which specializes in one specific task, such as plotting routes or playing chess), of which there is already plenty in our world. Then we looked at why it is so difficult to get from ANI to artificial general intelligence (AGI, which can match a human's intellectual abilities on any task). We concluded that the exponential pace of technological progress hints that AGI may arrive fairly soon. Finally, we decided that as soon as machines reach human-level intelligence, the following could happen immediately:

We ended up staring at the screen, unable to believe that artificial superintelligence (ASI, which is far smarter than any human) could arrive within our lifetimes, and trying to pick the emotion that best captured how we feel about it.

Before we delve into ASI specifically, let's remind ourselves what it means for a machine to be superintelligent.

The key distinction is between speed superintelligence and quality superintelligence. Often the first thing that comes to mind when people imagine a superintelligent computer is that it can think much faster than a human, millions of times faster, so that in five minutes it would work out what would take a human ten years. (“I know Kung Fu!”)

That sounds impressive, and ASI really would think faster than any human, but what will truly set it apart is the quality of its intelligence, which is something else entirely. Humans are much smarter than monkeys not because they think faster, but because human brains contain a number of sophisticated cognitive modules that enable complex linguistic representations, long-term planning, and abstract reasoning, none of which monkeys are capable of. Speed up a monkey's brain a thousand times and it still won't become smarter than us: even with ten years, it could not assemble a construction kit from the instructions, a job that would take a human a couple of hours at most. There are things a monkey will never learn, no matter how many hours it spends or how fast its brain works.

Moreover, a monkey can't do what humans do because its brain simply cannot grasp that those worlds exist. A monkey may become familiar with what a human is and what a skyscraper is, but it will never understand that the skyscraper was built by humans. In its world, everything that big belongs to nature: not only can the macaque not build a skyscraper, it cannot even conceive that anyone could build one. And all of this is the result of a small difference in the quality of intelligence.

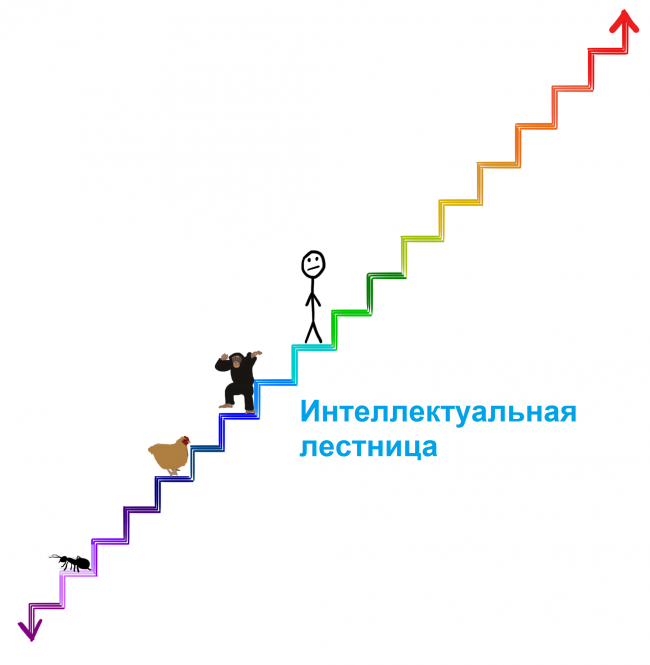

On the full scale of intelligence we are talking about, or even just by the standards of biological creatures, the difference in intelligence quality between humans and monkeys is tiny. In the previous article, we placed biological cognitive abilities on a ladder:

To appreciate how big a deal a superintelligent machine would be, imagine one standing two steps above humans on this ladder. Such a machine might be only slightly superintelligent, but its advantage over our cognitive abilities would be as large as ours over monkeys'. And just as chimpanzees will never grasp that a skyscraper can be built, we might never understand what a machine two steps up understands, even if it tried to explain it to us. And that is only two steps. A machine further up the ladder would see us the way we see ants: it could spend years trying to teach us what, from its position, are the simplest things, and the effort would be completely hopeless.

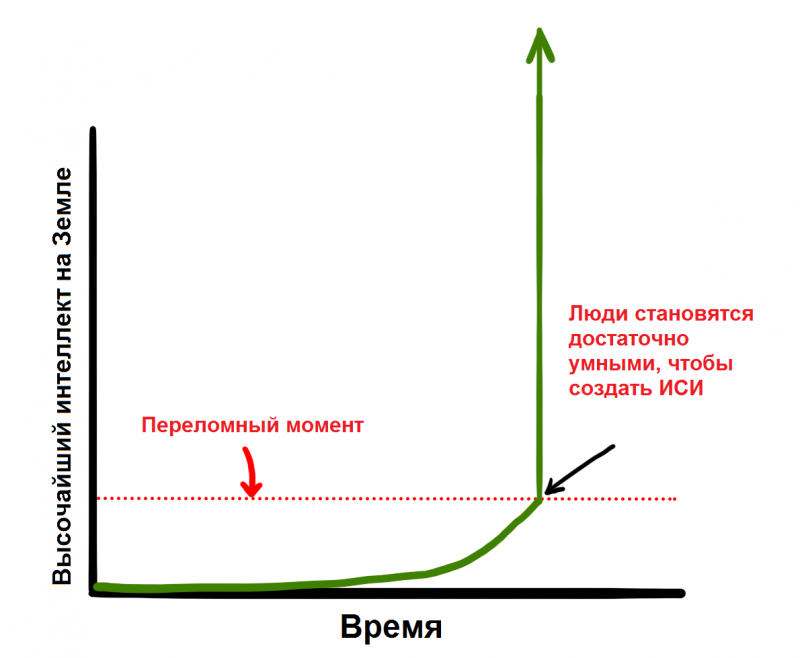

The type of superintelligence we will talk about today lies far beyond this ladder. This is an intelligence explosion: the smarter a machine becomes, the faster it can increase its own intelligence, so it keeps gaining momentum. Such a machine might take years to pass chimpanzees in intelligence, but perhaps only a couple of hours to get a couple of steps above us. From that point on, it could be jumping four steps every second. That is why we should understand that very soon after the first news that a machine has reached human-level intelligence, we may face the reality of sharing the Earth with something far higher than us on this ladder (maybe millions of times higher):

And since we have already established that it is hopeless to try to understand the power of a machine only two steps above us, let's state once and for all that there is no way to know what an ASI will do or what the consequences will be for us. Anyone who claims otherwise simply doesn't understand what superintelligence means.
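None of this can be predicted, but the accelerating climb described above can at least be illustrated with a deliberately crude toy model. The sketch below is not from the article or from any expert it cites: it simply assumes that intelligence I grows at a rate proportional to I squared, a made-up rule standing in for “the smarter the machine, the faster it improves itself”, and computes how long each one-step climb on the ladder takes (levels and time are in arbitrary units).

```python
# Toy model of an intelligence explosion (an illustrative assumption, not a forecast):
# intelligence I grows as dI/dt = k * I**2, so the smarter the system is,
# the faster it improves itself. Solving the ODE gives the time to climb
# from level a to level b:
#     t(a -> b) = (1/k) * (1/a - 1/b)


def time_to_climb(a: float, b: float, k: float = 1.0) -> float:
    """Time the toy model needs to go from intelligence level a to level b."""
    if not 0 < a < b:
        raise ValueError("need 0 < a < b")
    return (1.0 / a - 1.0 / b) / k


def step_durations(first_level: int, last_level: int, k: float = 1.0) -> list[float]:
    """Duration of each one-step climb n -> n + 1 for n in [first_level, last_level)."""
    return [time_to_climb(n, n + 1, k) for n in range(first_level, last_level)]


if __name__ == "__main__":
    durations = step_durations(1, 10)
    for n, d in enumerate(durations, start=1):
        print(f"step {n} -> {n + 1}: {d:.4f} time units")
    # The sum telescopes to 1 - 1/10, so the total stays below 1/k no matter
    # how many steps are climbed: the blow-up happens in finite time.
    print(f"total: {sum(durations):.4f}")
```

In this toy model the first step takes 0.5 time units and the ninth only about 0.011. The squared growth rule is what makes the steps shrink so fast; with a rate simply proportional to I (plain exponential growth), the steps would still get shorter, but far more gently.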

Evolution developed the biological brain slowly and gradually over hundreds of millions of years, and if humans create a superintelligent machine, in a sense we will have leapfrogged evolution. Or it will be part of evolution: perhaps evolution works in such a way that intelligence develops gradually until it reaches a turning point that heralds a new future for all living things:

For reasons we will discuss later, a large part of the scientific community believes the question is not whether we will reach this turning point, but when.

Where does that leave us?

I don't think anyone in this world, neither I nor you, can say what will happen when we reach the turning point. Oxford philosopher and leading AI theorist Nick Bostrom believes that all possible outcomes fall into two broad categories.

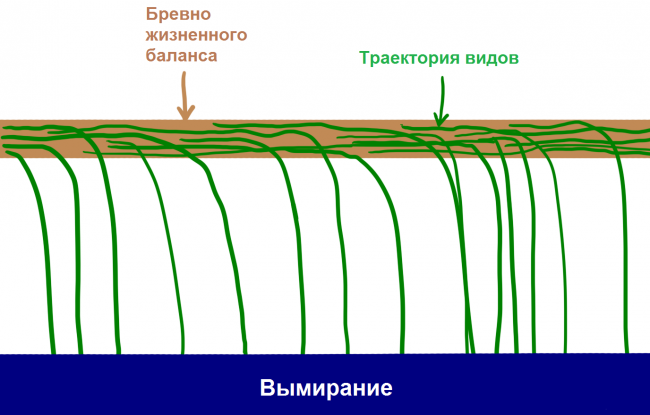

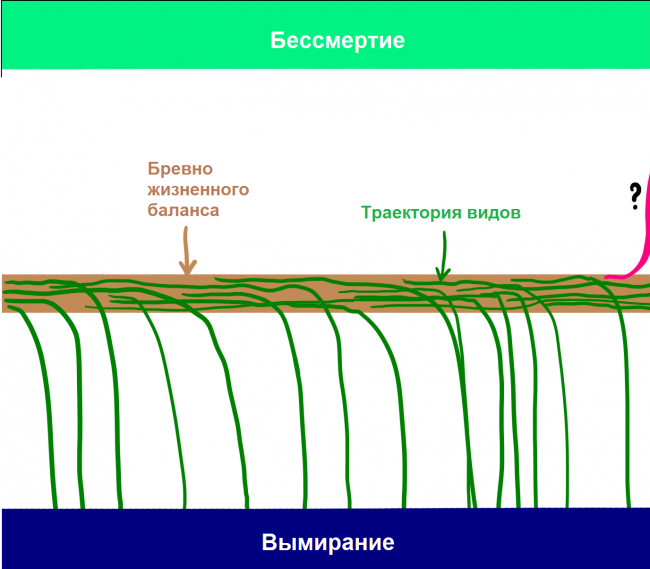

First, looking at history, we know the following about life: species appear, exist for a certain time, and then inevitably fall off the balance beam of life and go extinct.

“All species eventually go extinct” has been as reliable a rule in history as “all people eventually die.” So far 99.9% of species have fallen off the beam, and it seems clear that if a species stays on it long enough, a gust of natural wind or a sudden asteroid will knock it off. Bostrom calls extinction an attractor state: a place all species teeter toward and from which no species has ever returned.

And although most scientists agree that ASI would be able to drive humans to extinction, many also believe that the capabilities of ASI could allow individuals (and the species as a whole) to reach the second attractor state: species immortality. Bostrom believes species immortality is as much an attractor state as species extinction; that is, if we get there, we will be locked into eternal existence. So even though every species before ours has fallen off this beam into extinction, Bostrom believes the beam has two sides, and there has simply never been anything on Earth intelligent enough to figure out how to fall off on the other side.

If Bostrom and others are right, and judging by everything we know, they may well be, we have to accept two rather shocking facts:

The arrival of ASI will, for the first time in history, open up the possibility for a species to achieve immortality and escape the fatal cycle of extinction.

The arrival of ASI will have such an unimaginably enormous impact that it is likely to knock humanity off the beam in one direction or the other.

It may be that whenever evolution reaches such a turning point, it permanently ends the relationship between people and the balance beam of life and creates a new world, with or without people.

This raises an interesting question that only a lazy person would fail to ask: when will we reach this turning point, and which side will it put us on? Nobody in the world knows the answer to this two-part question, but many smart people have been trying to figure it out for decades. In the rest of the article, we will find out what they have concluded.

Let's start with the first part of the question: when will we reach the turning point? In other words, how much time is left until the first machine reaches superintelligence?

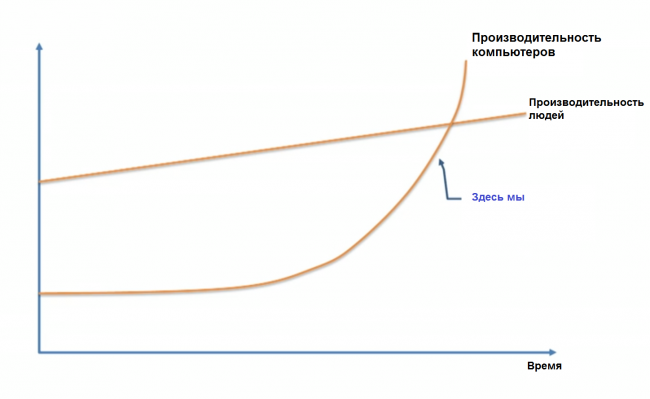

Opinions vary widely. Many, including Professor Vernor Vinge, scientist Ben Goertzel, Sun Microsystems co-founder Bill Joy, and futurist Ray Kurzweil, agree with machine learning expert Jeremy Howard, who presented the following chart in a TED Talk:

These people share the view that ASI will arrive soon: the exponential growth that looks slow to us today will literally explode within the next few decades.

Others, like Microsoft co-founder Paul Allen, research psychologist Gary Marcus, computer scientist Ernest Davis, and tech entrepreneur Mitch Kapor, believe that thinkers like Kurzweil seriously underestimate the scale of the problem and that we are not that close to the turning point.

The Kurzweil camp counters that the only underestimation going on is the failure to appreciate exponential growth, and compares the doubters to people who looked at the slowly growing internet in 1985 and claimed it would have no impact on the world any time soon.

The doubters can reply that in the development of intelligence, each next step of progress is harder to make than the last, and that this cancels out the usual exponential nature of technological progress. And so on.

The third camp, which includes Nick Bostrom, disagrees with neither the first nor the second, arguing that a) all this could absolutely happen in the near future, but b) there is no guarantee that it will, and it could take much longer.
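The doubters' rebuttal, and why the third camp hedges, can be made concrete with another toy model (hypothetical, with made-up numbers): suppose the research effort available each year grows exponentially, but each new level of capability costs exponentially more effort than the last. The sketch below tracks the level reached at the end of each year under those assumptions; the function name and parameters are illustrative only.

```python
def simulate(years: int, resource_growth: float, difficulty_growth: float) -> list[int]:
    """Return the capability level reached at the end of each simulated year.

    Hypothetical toy model: the research effort available in year t is
    resource_growth**t, and climbing from level L to L + 1 costs
    difficulty_growth**L units of effort. Unspent effort carries over.
    """
    level, banked, history = 0, 0.0, []
    for t in range(years):
        banked += resource_growth ** t
        # Spend banked effort on as many level-ups as it can pay for.
        while banked >= difficulty_growth ** level:
            banked -= difficulty_growth ** level
            level += 1
        history.append(level)
    return history


if __name__ == "__main__":
    # Exponential resources, exponentially harder steps: the effects cancel.
    print("difficulty doubles:", simulate(10, 2.0, 2.0))
    # Exponential resources, constant difficulty: progress explodes.
    print("difficulty constant:", simulate(10, 2.0, 1.0))
```

With both growth factors equal to 2, the model gains exactly one level per year, so exponential investment yields only linear progress; with constant difficulty, the same investment yields exponential progress. Which regime describes real AI research is exactly what the camps disagree about, and the toy model does not settle it.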

Still others, like the philosopher Hubert Dreyfus, believe all three groups are naive to think there will be a turning point at all, and that we will most likely never get to ASI.

What happens when we put all these opinions together?

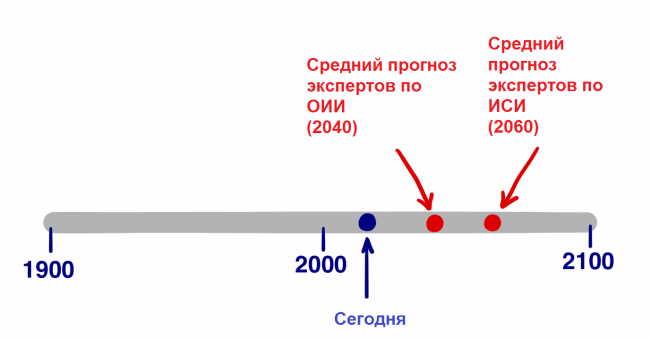

In 2013, Nick Bostrom and Vincent C. Müller surveyed hundreds of AI experts at a series of conferences, asking: “When do you predict human-level AGI will be achieved?” They asked respondents to name an optimistic year (one in which there is a 10% chance we will have AGI), a realistic guess (the year by which there is a 50% chance of AGI), and a confident guess (the earliest year by which AGI appears with 90% probability). Here are the results:

* Median optimistic year (10% probability): 2022

* Median realistic year (50% probability): 2040

* Median pessimistic year (90% probability): 2075

So the median respondent thinks it is more likely than not that we will have AGI within 25 years. And a 90% probability of AGI by 2075 means that if you are fairly young now, it will most likely happen within your lifetime.

A separate, more recent study by James Barrat (author of the acclaimed and very good book Our Final Invention, excerpts from which I have shared with Hi-News.ru readers) and Ben Goertzel at the annual AGI Conference simply asked attendees in which year we will reach AGI: by 2030, by 2050, by 2100, after 2100, or never. Here are the results:

* By 2030: 42% of respondents

* By 2050: 25%

* By 2100: 20%

* After 2100: 10%

* Never: 2%

The results resemble Bostrom's. In Barrat's survey, more than two thirds of respondents believe AGI will be here by 2050, and a little less than half believe it will appear within the next 15 years. It is also striking that only 2% of respondents see no AGI in our future at all.
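The “more than two thirds” and “a little less than half” figures come from adding up the answer buckets. The short sketch below keeps a running total using only the percentages listed above (as quoted, they add up to 99%); reading the buckets as successive deadlines, so that “by 2050” includes everyone who answered “by 2030”, is our interpretation of the question rather than something the survey text spells out.

```python
# Percent of respondents per answer, as quoted in the list above.
BARRAT_SURVEY = [
    ("by 2030", 42),
    ("by 2050", 25),
    ("by 2100", 20),
    ("after 2100", 10),
    ("never", 2),
]


def cumulative_shares(answers):
    """Return (answer, running total of percentages) pairs, in listed order."""
    running, result = 0, []
    for label, percent in answers:
        running += percent
        result.append((label, running))
    return result


if __name__ == "__main__":
    for label, share in cumulative_shares(BARRAT_SURVEY):
        print(f"{label:>10}: {share}%")
```

The “by 2050” row reaches 67%, just above two thirds (66.7%), and the “by 2030” row, 42%, is the “a little less than half” expecting AGI within about 15 years. The last row only confirms the 99% total.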

But AGI is not the turning point; ASI is. So when do the experts think we will have ASI?

Bostrom asked the experts how likely it is that we reach ASI a) within two years of reaching AGI (that is, almost instantly, through an intelligence explosion), and b) within 30 years. The results?

The median answer gave a fast (two-year) transition from AGI to ASI only a 10% probability, but a transition of 30 years or less a 75% probability.

From this data we don't know what transition length respondents would assign a 50% probability, but based on the two answers above, let's estimate 20 years. In other words, the median opinion among the world's leading AI experts puts the turning point around 2060 (AGI in 2040 plus about 20 years for the transition from AGI to ASI).
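The 20-year figure is an eyeball estimate. One simple way to reproduce it, assuming (our assumption, not the survey's) that the probability rises linearly between the two quoted answers, is to interpolate between 10% at 2 years and 75% at 30 years and add the result to the 2040 median for AGI. The helper name below is hypothetical.

```python
def interpolate_years(p, p_lo, years_lo, p_hi, years_hi):
    """Linear interpolation: transition length at which probability reaches p."""
    return years_lo + (p - p_lo) * (years_hi - years_lo) / (p_hi - p_lo)


# Median answers quoted above: 10% within 2 years, 75% within 30 years.
transition_years = interpolate_years(0.50, 0.10, 2, 0.75, 30)
AGI_MEDIAN_YEAR = 2040  # median "realistic" year from the same survey
asi_year = AGI_MEDIAN_YEAR + transition_years

print(f"50% transition length: {transition_years:.1f} years")
print(f"implied ASI year: about {asi_year:.0f}")
```

Linear interpolation gives a transition of about 19 years and an ASI year of roughly 2059, in line with the rough 20-year and 2060 estimates; a differently shaped probability curve would shift the answer.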

Of course, all of these statistics are speculative and simply represent the opinions of AI experts, but they do indicate that most of the people who know the subject best agree that ASI is likely to arrive by around 2060. That is just 45 years away.

Now for the second question: when we reach the turning point, which side of the fateful choice will we end up on?

Superintelligence will be enormously powerful, and the critical question for us will be:

Who or what will control this power, and what will its motivation be?

The answer will determine whether ASI becomes an unimaginably great development, an unimaginably terrifying one, or something in between.

Naturally, the expert community is trying to answer these questions. Bostrom's survey asked respondents to estimate the likelihood of various outcomes of AGI for humanity, and it found a 52% chance that the outcome will be good or extremely good and a 31% chance that it will be bad or extremely bad. The poll attached to the end of the previous part, conducted among you, dear Hi-News readers, showed roughly the same results. A relatively neutral outcome got only 17%. In other words, we all believe that the arrival of AGI will be a huge deal. It is also worth noting that this survey concerns the arrival of AGI; for ASI, the share of neutral outcomes would be even lower.

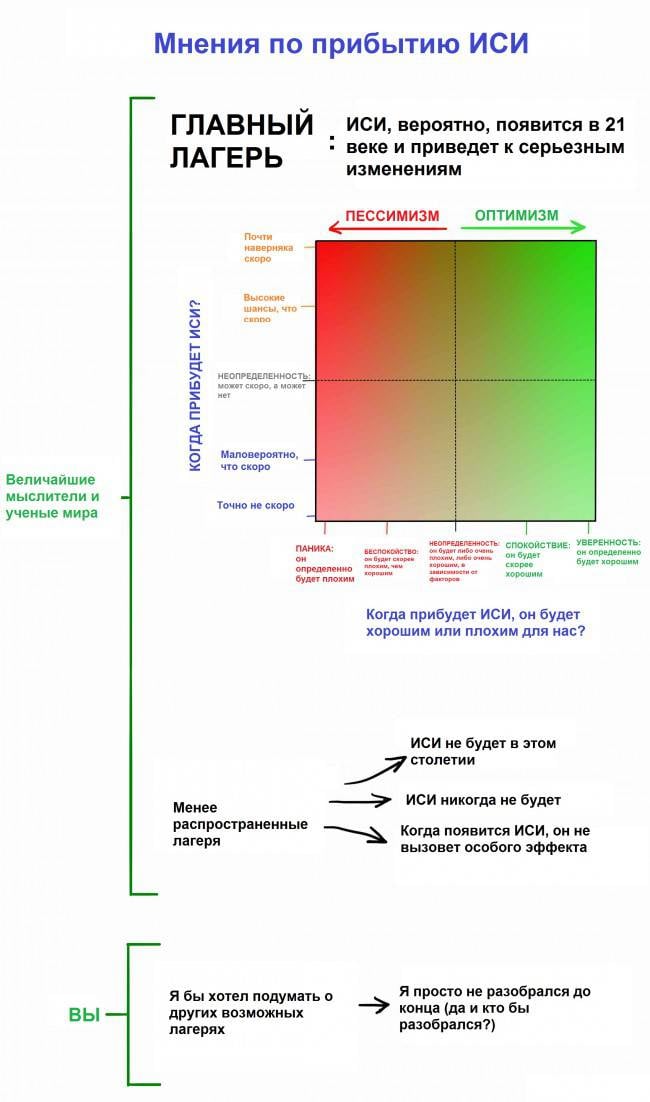

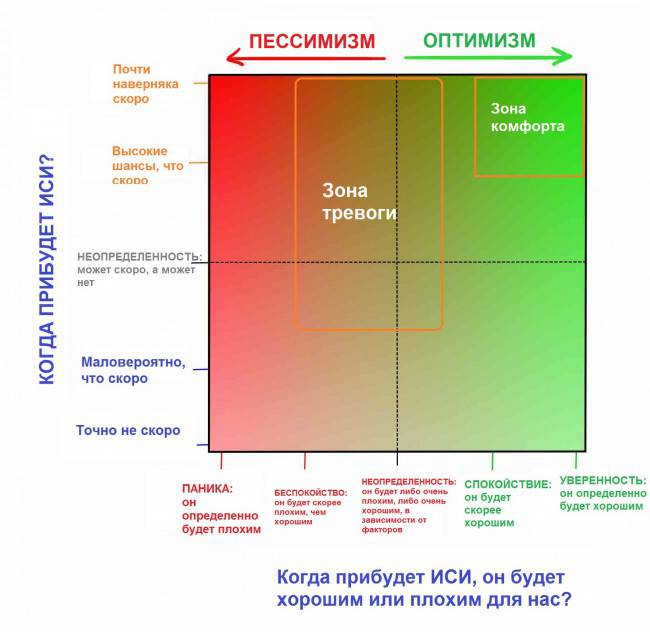

Before we go further into the good and bad sides of the question, let's combine both parts, “when will this happen?” and “will it be good or bad?”, into a chart that covers the views of most experts.

We will talk about the main camp in a minute, but first decide where you stand. Most likely, you are where I was before I started looking into this topic. There are several reasons people generally don't think about it:

* As mentioned in the first part, movies have seriously confused people by presenting unrealistic AI scenarios, which has led us not to take AI seriously at all. James Barrat compared this to how we would react if the Centers for Disease Control issued a serious warning about vampires in our future.

* Because of so-called cognitive biases, it is very hard for us to believe something is real until we see proof. You can easily imagine computer scientists in 1988 regularly discussing the far-reaching consequences of the internet and what it might become, yet people hardly believed it would change their lives until it actually did. Computers in 1988 simply couldn't do such things, so people looked at their computers and thought, “Seriously? This is what's going to change the world?” Their imagination was limited by what personal experience had taught them a computer was, which made it hard to picture what computers might become capable of. The same thing is happening now with AI. We hear that it will be a big deal, but since we haven't come face to face with it yet and mostly see rather weak examples of AI in today's world, it is hard for us to believe it will radically change our lives. It is against these biases that experts from all camps, along with other interested people, are trying to get our attention through the noise of everyday collective self-absorption.

* Even if we believed all this, how many times today have you thought about the fact that you will spend the rest of eternity not existing? Not many, right? Even though that fact is far more important than anything you do on a given day. That's because our brains usually focus on small everyday things, no matter how extraordinary the long-term situation we are in. That's simply how we're built.

One of the goals of this article is to get you out of the “I prefer to think about other things” camp and into one of the expert camps, even if you end up standing right where the two dotted lines cross in the chart above, completely undecided.

In the course of my research, it became clear that most people's opinions fall into the “main camp”, and three quarters of the experts fall into two subcamps within it.

We will take a thorough look at both camps. Let's start with the fun one.

Why the future may be our greatest dream

As we explore the world of AI, we find a surprising number of people in the comfort zone. The people in the upper-right square are buzzing with excitement. They believe we will fall on the good side of the beam, and they are also confident that we will inevitably get there. For them, the future holds everything one could dream of.

What sets these people apart from other thinkers is not that they want to be on the happy side, but that they are sure it is the side waiting for us.

Where this confidence comes from is debatable. Critics believe it stems from a blinding excitement that overshadows the potential downsides. But supporters say gloomy forecasts are always naive: technology has helped us more than it has harmed us, and always will.

You are free to take either view, but set your skepticism aside for a moment and take a good look at the happy side of the balance beam, trying to accept that everything you are reading might really happen. If you showed hunter-gatherers our world of comfort, technology, and endless abundance, it would seem like magical fiction to them, and we are being rather modest in refusing to admit that an equally incomprehensible transformation may await us.

Nick Bostrom describes three ways a superintelligent AI system could operate:

* As an oracle, which can answer almost any precisely posed question, including complex ones that humans cannot answer, for example, “How can a car engine be made more efficient?” Google is a primitive oracle.

* As a genie, which executes any high-level command it is given (for example, “Use a molecular assembler to build a new, more efficient kind of car engine”) and then waits for the next command.

* As a sovereign, which has broad access and the freedom to act in the world, making its own decisions about how best to proceed. It might invent a cheaper, faster, and safer way than cars for people to travel privately.

Questions and tasks that seem difficult to us would look to a superintelligent system like a request to improve the situation “my pencil fell off the table”, which you would solve by simply picking the pencil up and putting it back.

Eliezer Yudkowsky, an American artificial intelligence researcher, put it well:

There are many eager scientists, inventors, and entrepreneurs who have settled into the confident comfort zone of our chart, but for a tour of the best of all possible worlds, we need only one guide.

Ray Kurzweil provokes strong reactions in both directions. Some idolize his ideas; some despise him. Some stay in the middle: Douglas Hofstadter, discussing the ideas in Kurzweil's books, eloquently remarked that “it is as if you took a lot of good food and a little dog poop, and then mixed everything so that it is impossible to understand what is good and what is bad.”

Whether or not you like his ideas, it is impossible to pass them by without some interest. He started inventing things as a teenager, and in the decades that followed he invented several important things, including the first flatbed scanner, the first scanner that converts text to speech, the well-known Kurzweil music synthesizer (the first true electric piano), and the first commercially marketed large-vocabulary speech recognizer. He is also the author of five acclaimed books. Kurzweil is known for his bold predictions, and his track record is quite good: in the late 1980s, when the internet was still in its infancy, he predicted that by the early 2000s it would become a global phenomenon. The Wall Street Journal called Kurzweil a “restless genius”, Forbes “the ultimate thinking machine”, Inc. Magazine “Edison's rightful heir”, and Bill Gates “the best person I know at predicting the future of artificial intelligence.” In 2012, Google co-founder Larry Page invited Kurzweil to become Google's Director of Engineering. In 2011, he co-founded Singularity University, which is hosted by NASA and partly sponsored by Google.

His biography matters. When Kurzweil describes his vision of the future, it sounds completely crazy, but the really crazy thing is that he is far from crazy: he is an extremely intelligent, educated, and sensible person. You may think his predictions are wrong, but he is no fool. Kurzweil's predictions are shared by many experts in the comfort zone, such as Peter Diamandis and Ben Goertzel. Here is what he thinks will happen.

Chronology

Kurzweil believes computers will reach artificial general intelligence (AGI) by 2029, and that by 2045 we will have not only artificial superintelligence but a completely new world: the time of the so-called singularity. His AI timeline was long considered outrageously overzealous, and many still consider it so, but over the last 15 years the rapid progress of artificial narrow intelligence (ANI) systems has brought many experts much closer to Kurzweil's view. His predictions remain more ambitious than those in Bostrom's survey (AGI by 2040, ASI by 2060), but not by much.

According to Kurzweil, the singularity of 2045 will result from three simultaneous revolutions: in biotechnology, in nanotechnology and, most importantly, in AI. But before we continue, since nanotechnology comes up in almost every discussion of the future of AI, let's take a minute to talk about it.

A few words about nanotechnology

Nanotechnology is what we call technology that deals with manipulating matter between 1 and 100 nanometers in size. A nanometer is one billionth of a meter, or one millionth of a millimeter; the 1-100 nm range covers viruses (about 100 nm across), DNA (10 nm wide), and molecules such as hemoglobin (5 nm) and glucose (1 nm). If we ever master nanotechnology, the next step will be manipulating individual atoms, which are only about one order of magnitude smaller (~0.1 nm).

To understand why controlling matter at this scale is so hard, let's scale things up. The International Space Station orbits about 431 kilometers above the Earth. If people were giants so tall that their heads touched the ISS, they would be about 250,000 times bigger than they are now. Scale the 1-100 nm range up 250,000 times and you get 0.25 millimeters to 2.5 centimeters. So nanotechnology is the equivalent of a giant as tall as the ISS's orbit trying to carefully build things out of materials between the size of a grain of sand and an eyeball. To reach the next level, controlling individual atoms, the giant would have to carefully position objects 1/40 of a millimeter across, so small that ordinary-sized people would need a microscope to see them.
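The scaling arithmetic above is easy to check. The sketch below multiplies sizes in nanometers by the 250,000 factor from the text; the 1.7 m human height used for the final sanity check is an assumed round figure, not something the article states.

```python
SCALE = 250_000          # magnification factor used in the text
NM_PER_MM = 1_000_000    # 1 mm = 10**6 nm


def scaled_mm(size_nm: float, scale: float = SCALE) -> float:
    """Size in millimetres of an object of size_nm nanometres magnified `scale` times."""
    return size_nm * scale / NM_PER_MM


if __name__ == "__main__":
    for label, nm in [("atom", 0.1), ("glucose", 1), ("hemoglobin", 5),
                      ("DNA width", 10), ("virus", 100)]:
        print(f"{label:>10}: {nm:>5} nm -> {scaled_mm(nm):8.3f} mm")
    # Sanity check with an assumed 1.7 m person: 1.7 m * 250,000 = 425 km,
    # comparable to the altitude at which the ISS orbits.
    print(f"giant height: {1.7 * SCALE / 1000:.0f} km")
```

The 1 nm and 100 nm rows give 0.25 mm and 25 mm (2.5 cm), and the 0.1 nm atom becomes 0.025 mm, the 1/40 of a millimeter mentioned above.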

Richard Feynman first talked about nanotechnology in 1959, in his lecture “There's Plenty of Room at the Bottom”. He said: “The principles of physics, as far as I can see, do not speak against the possibility of maneuvering things atom by atom. In principle, a physicist could synthesize any chemical substance that a chemist writes down. How? Put the atoms down where the chemist says, and so you make the substance.” It's that simple. If you can figure out how to move individual molecules or atoms, you can make almost anything.

Nanotechnology became a serious scientific field in 1986, when engineer Eric Drexler laid out its foundations in his seminal book Engines of Creation, although Drexler himself says that those who want to learn about current ideas in nanotechnology should read his 2013 book Radical Abundance.

A few words about “gray goo”

Let's go deeper into nanotechnology. In particular, “gray goo” is one of the least pleasant topics in the field, and it cannot be left out. Older versions of nanotech theory proposed a method of nanoassembly that involved creating trillions of tiny nanobots that would work together to build something. One way to get trillions of nanobots is to build one that can replicate itself: one becomes two, two become four, and so on. Within a day, there would be several trillion nanobots. Such is the power of exponential growth. Clever, isn't it?

It is clever, right up until it leads to the apocalypse. The problem is that the same power of exponential growth that makes it so convenient to produce a trillion nanobots quickly also makes self-replication a terrifying prospect. What if the system malfunctions, and instead of stopping at a couple of trillion, the nanobots keep multiplying? What if the whole process depends on carbon? Earth's biomass contains about 10^45 carbon atoms. A nanobot would consist of about 10^6 carbon atoms, so 10^39 nanobots would devour all life on Earth, and that would take only 130 replications. An ocean of nanobots (“gray goo”) would flood the planet. Scientists think a nanobot could replicate in about 100 seconds, which means a simple mistake could kill all life on Earth in just 3.5 hours.
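The arithmetic in the paragraph above can be checked in a few lines. The sketch below uses only the figures the text quotes (10^45 carbon atoms in Earth's biomass, 10^6 atoms per nanobot, 100 seconds per replication) and assumes, as the scenario does, that every nanobot copies itself once per cycle, starting from a single machine.

```python
CARBON_ATOMS_IN_BIOMASS = 10**45  # figure quoted in the text
CARBON_ATOMS_PER_NANOBOT = 10**6  # figure quoted in the text
SECONDS_PER_REPLICATION = 100     # figure quoted in the text


def doublings_to_reach(count: int) -> int:
    """Smallest n such that 2**n >= count, starting from a single nanobot."""
    return (count - 1).bit_length()


nanobots_needed = CARBON_ATOMS_IN_BIOMASS // CARBON_ATOMS_PER_NANOBOT  # 10**39
replications = doublings_to_reach(nanobots_needed)
hours = replications * SECONDS_PER_REPLICATION / 3600

# For comparison: how long it takes to reach "a trillion" nanobots.
trillion_minutes = doublings_to_reach(10**12) * SECONDS_PER_REPLICATION / 60

print(f"replications needed: {replications}")
print(f"time to consume the biomass: {hours:.1f} hours")
print(f"time to reach a trillion nanobots: {trillion_minutes:.0f} minutes")
```

The result, 130 doublings and about 3.6 hours, matches the figures in the text. The same rule reaches a trillion nanobots after 40 doublings, a little over an hour, which is why self-replication looks so attractive for manufacturing in the first place. Using integer `bit_length` instead of a floating-point logarithm keeps the doubling count exact for these very large numbers.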

It could be worse: nanotechnology could fall into the hands of terrorists or malicious experts. They could create several trillion nanobots and program them to spread quietly around the world over a couple of weeks. Then, at the push of a button, it would take only about 90 minutes for them to consume everything, with no chance of stopping them.

Although this horror story has been widely discussed for years, the good news is that it is just that: a horror story. Eric Drexler, who coined the term “gray goo”, recently said: “People love horror stories, and this one belongs in the same category as the zombie stories. The idea itself eats brains.”

Once we master nanotechnology, we can use it to make technical devices, clothing, food, and bioproducts such as artificial blood cells, tiny destroyers of viruses and cancer cells, muscle tissue, and so on: anything at all. In a world that uses nanotechnology, the cost of a material will no longer be tied to its scarcity or the difficulty of its manufacturing process, but to the complexity of its atomic structure. In a nanotech world, a diamond might be cheaper than a pencil eraser.

We are not there yet, and it is not entirely clear whether we are underestimating or overestimating how hard the path will be. Still, everything suggests that nanotechnology is not far off. Kurzweil predicts we will have it by the 2020s. Governments know that nanotechnology could bring enormous changes, so they are investing many billions in it.

Just imagine the possibilities a superintelligent computer would have if it gained access to a reliable nanoscale assembler. But nanotechnology is our idea, something we are still struggling to master. What if, for an ASI system, it is mere child's play, and the ASI comes up with technologies far more powerful than anything we can even imagine? We agreed: no one can predict what artificial superintelligence will be capable of. Our brains are believed to be unable to predict even the smallest part of what will happen.

What could AI do for us?

Armed with superintelligence and all the technology that superintelligence could create, an ASI would probably be able to solve every problem humanity faces. Global warming? ASI would first halt carbon emissions by inventing a host of efficient ways to produce energy without fossil fuels. Then it would come up with an effective, innovative way to remove excess CO2 from the atmosphere. Cancer and other diseases? Not a problem: health care and medicine would change in ways that are impossible to imagine. World hunger? ASI would use nanotechnology to build meat from scratch that is identical to natural meat; in other words, real meat.

Nanotechnology could turn a pile of garbage into a vat of fresh meat or other food (not necessarily in a familiar form: picture a giant cube of apple) and distribute it around the world using advanced transportation systems. Of course, this would be wonderful for animals, which would no longer have to die for food. ASI could also do many other things, such as saving endangered species or even bringing back extinct ones from preserved DNA. ASI could even solve our most complex macroeconomic problems: our hardest debates in economics, ethics, and philosophy, and the questions of world trade, would all be painfully obvious to an ASI.

But there is something special ASI could do for us, something so alluring and tantalizing that it would change everything: ASI could help us deal with mortality. As you gradually grasp what AI might be capable of, you may also reconsider everything you think about death.

Evolution had no reason to extend our lifespan beyond what it is now. If we live long enough to give birth to children and raise them until they can fend for themselves, that is enough for evolution. From an evolutionary point of view, a lifespan of 30-plus years is sufficient, so there was no reason for natural selection to favor mutations that prolong life. William Butler Yeats called our species “a soul fastened to a dying animal.” Not much fun.

And because everyone dies someday, we live with the assumption that death is inevitable. We think of aging as being like time: both keep moving forward, and there is no way to stop them. But the thought of death is treacherous: in its grip, we forget to live. Richard Feynman wrote:

“There is a wonderful thing in biology: nothing in this science indicates that death is necessary. If we want to build a perpetual motion machine, we have discovered enough laws in physics to see that it is either absolutely impossible or else the laws are wrong. But nothing yet found in biology indicates the inevitability of death. This suggests to me that it is not at all inevitable, and that it is only a matter of time before biologists discover what is causing us the trouble and this terrible universal disease is cured.”

The fact is that aging is not tied to time. Aging is simply the physical materials of the body wearing out. Car parts wear out too, but is a car's aging inevitable? If you repair or replace parts as they wear out, the car will run forever. The human body is no different, just far more complex.

Kurzweil talks about intelligent, Wi-Fi-connected nanobots in the bloodstream that could perform countless tasks for human health, including routinely repairing or replacing worn-out cells anywhere in the body. If this process were perfected (or replaced by a better alternative devised by a smarter ASI), it would not just keep the body healthy; it could reverse aging. The difference between a 60-year-old body and a 30-year-old body comes down to a handful of physical differences that the right technology could correct. ASI could build a machine that a person would enter at 60 and leave at 30.

Even an aging brain could be renewed. An ISI would surely know how to do this without affecting the brain's data (personality, memories, and so on). A 90-year-old suffering from severe brain degeneration could be restored, come out sharp, and start a whole new career. This may sound absurd, but the body is just a collection of atoms, and an ISI would presumably be able to manipulate all kinds of atomic structures with ease. So it is not so absurd after all.

Kurzweil also believes that artificial materials will be integrated into the body more and more over time. At first, organs could be replaced with super-advanced machine versions that would run forever and never fail. Then we could redesign the body entirely, for example by replacing red blood cells with perfect nanobots that propel themselves, eliminating the need for a heart altogether. We could also enhance our cognitive abilities, think billions of times faster, and access all the information available to humanity through the cloud.

The possibilities for new experiences would be truly limitless. People have already separated sex from its original purpose: they engage in it for pleasure, not just for reproduction. Kurzweil thinks we could do the same with food. Nanobots could deliver perfect nutrition directly to the body's cells and let unhealthy substances pass through the body without harm. Nanotechnology theorist Robert Freitas has already designed a replacement for blood cells that, if implemented in the human body, might allow a person to go 15 minutes without breathing. And that was designed by a human. Imagine what an ISI could do.

Eventually, Kurzweil believes, people will reach a point where they are entirely artificial: a time when we will look at biological materials and marvel at how primitive they were, and read about the early stages of human history amazed that microbes, accidents, diseases, or simple old age could kill a person against their will. In the end, humans will defeat their own biology and become immortal. This is the path to the happy side of the balance beam we have been talking about from the very beginning. And the people who believe in it are also convinced that this future awaits us very, very soon.

You will certainly not be surprised that Kurzweil's ideas have drawn harsh criticism. His prediction of the singularity in 2045, and of eternal life for people afterward, has been mocked as “the rapture of the nerds” and “intelligent design for people with an IQ of 140.” Others have questioned his optimistic timeline, his understanding of the human body and brain, and his extrapolation of Moore's law. For every expert who believes in Kurzweil's ideas, there are three who think he is mistaken.

But the most interesting thing is that most of the experts who disagree with him do not actually say that any of this is impossible. Instead of saying “nonsense, this will never happen,” they say something like “all of this will happen if we get to ISI safely, but that is exactly the catch.” Bostrom, one of the best-known AI experts warning about the dangers of AI, also acknowledges:

“It is hard to imagine any problem that a superintelligence could not solve, or at least help us solve. Disease, poverty, environmental destruction, suffering of all kinds: a superintelligence equipped with nanotechnology could eliminate all of these in a moment. A superintelligence could also give us an unlimited lifespan, by stopping and reversing the aging process through nanomedicine or by letting us upload ourselves to the cloud. It could create opportunities for an unlimited growth of our intellectual and emotional capacities, and it could help us build a world in which we live in joy and understanding, come closer to our ideals, and regularly fulfill our dreams.”

This is a quote from one of Kurzweil's critics, yet he acknowledges that all of this is possible if we manage to create a safe ISI. Kurzweil has simply described what artificial superintelligence could be, if it ever becomes possible. And if it turns out to be a good god.

The most obvious criticism of the “comfort zone” optimists is that they could be dead wrong about the downside of ISI. In his book The Singularity Is Near, Kurzweil devotes about 20 of its 700 pages to the potential threats of ISI. The question is not when we will get to ISI; the question is what its motivation will be. Kurzweil answers this question cautiously: “ISI is emerging from many disparate efforts and will be deeply integrated into the infrastructure of our civilization. In fact, it will be closely embedded in our bodies and brains. It will reflect our values, because it will be one with us.”

But if that is the answer, why are so many of the world's smartest people worried about the future of artificial intelligence? Why does Stephen Hawking say that the development of ISI “could spell the end of the human race”? Why does Bill Gates say he does not “understand why some people are not concerned”? Why does Elon Musk fear that we are “summoning the demon”? Why do so many experts consider ISI the greatest threat to humanity?

We will talk about that next time.

Based on an article by Tim Urban at waitbutwhy.com. The article uses materials from Nick Bostrom, James Barrat, Ray Kurzweil, Nils Nilsson, Steven Pinker, Vernor Vinge, Moshe Vardi, Russ Roberts, Stuart Armstrong and Kaj Sotala, Susan Schneider, Stuart Russell and Peter Norvig, Gary Marcus, Carl Shulman, John Searle, Jaron Lanier, Bill Joy, Kevin Kelly, Paul Allen, Stephen Hawking, Kurt Andersen, Mitch Kapor, Ben Goertzel, Arthur Clarke, Hubert Dreyfus, Ted Greenwald, Jeremy Howard.
